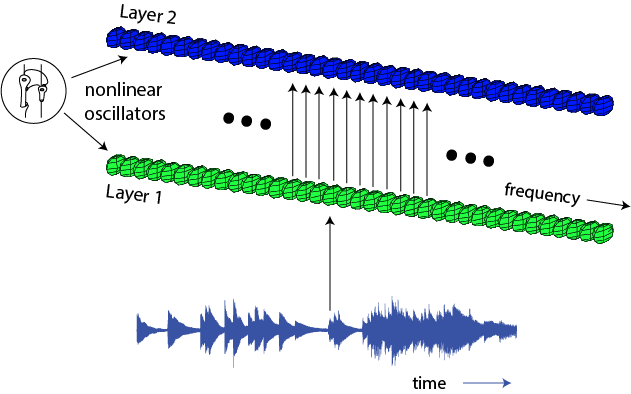

The GrFNN toolbox is a MATLAB toolbox for simulating signal processing, plasticity, and pattern formation in the auditory system. With it, you can test and explore neurodynamic models of auditory perception and cognition. Gradient frequency neural networks (GrFNNs for short) are one-dimensional networks of nonlinear oscillators, each tuned to a different natural frequency. Such networks are conceptually similar to banks of band-pass filters, except that the resonators are nonlinear rather than linear. The oscillators are ordered by natural frequency, from lowest to highest, and stimulated with time-varying acoustic signals. GrFNNs are relevant as models of active cochlear responses, auditory brainstem physiology, auditory cortical physiology, pitch perception, tonality perception, dynamic attending, and rhythm perception.
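To make the "bank of nonlinear resonators" idea concrete, here is a minimal Python sketch. It does not use the toolbox or its API. Every name in it (`log_spaced_frequencies`, `simulate_bank`, the parameters `alpha` and `beta`) is hypothetical, and each oscillator is a simplified Hopf-type normal form chosen for illustration, not the full canonical model analyzed in the papers cited below. The sketch drives a row of oscillators, ordered by natural frequency, with a single complex sinusoid and reports which oscillator responds most strongly.

```python
import cmath
import math


def log_spaced_frequencies(f_min, f_max, n):
    """Return n natural frequencies (Hz), log-spaced from lowest to highest."""
    ratio = (f_max / f_min) ** (1.0 / (n - 1))
    return [f_min * ratio ** k for k in range(n)]


def stimulus(t, stim_freq, stim_amp):
    """Complex sinusoidal input x(t) = A * exp(i*2*pi*f0*t)."""
    return stim_amp * cmath.exp(2j * math.pi * stim_freq * t)


def oscillator_rhs(z, f, x, alpha, beta):
    """Assumed simplified dynamics: dz/dt = z*(alpha + i*2*pi*f + beta*|z|^2) + x."""
    return z * (alpha + 2j * math.pi * f + beta * abs(z) ** 2) + x


def simulate_bank(freqs, stim_freq, stim_amp=0.1, alpha=-1.0, beta=-10.0,
                  duration=6.0, dt=0.001):
    """Integrate every oscillator with fixed-step RK4; return final amplitudes |z|."""
    z = [0j for _ in freqs]
    steps = int(round(duration / dt))
    for n in range(steps):
        t = n * dt
        # The stimulus is shared by all oscillators, so evaluate it once per stage.
        x0 = stimulus(t, stim_freq, stim_amp)
        xh = stimulus(t + 0.5 * dt, stim_freq, stim_amp)
        x1 = stimulus(t + dt, stim_freq, stim_amp)
        for k, f in enumerate(freqs):
            zk = z[k]
            k1 = oscillator_rhs(zk, f, x0, alpha, beta)
            k2 = oscillator_rhs(zk + 0.5 * dt * k1, f, xh, alpha, beta)
            k3 = oscillator_rhs(zk + 0.5 * dt * k2, f, xh, alpha, beta)
            k4 = oscillator_rhs(zk + dt * k3, f, x1, alpha, beta)
            z[k] = zk + (dt / 6.0) * (k1 + 2.0 * k2 + 2.0 * k3 + k4)
    return [abs(zk) for zk in z]


if __name__ == "__main__":
    freqs = log_spaced_frequencies(0.5, 8.0, 25)  # rhythm-range frequencies
    amps = simulate_bank(freqs, stim_freq=2.0)
    peak = max(range(len(amps)), key=lambda k: amps[k])
    print("strongest response near %.3f Hz" % freqs[peak])
```

With a negative `alpha` the oscillators are damped, so the bank behaves much like a filter bank with a peak near the stimulus frequency. The cubic term `beta*|z|^2` is what makes each resonator nonlinear: its effective damping grows with amplitude, so the response is compressive rather than proportional to the input.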

On the GrFNN Wiki pages you will find tutorials, reference pages, and neural models. Some people will want to use GrFNN to program beat-tracking models, some will want to try out our cochlear or brainstem simulations, and others will be interested in models of musical tonality. Each of these models has its own repository. GrFNNRhythm provides a user interface for exploring GrFNN models that process musical rhythms, including a version of the model from Large, Herrera & Velasco (2015). GrFNNBrainstem contains a version of the brainstem model from Lerud, Almonte, Kim & Large (2014). GrFNNCochlea provides tools for running and analyzing the cochlear models from Lerud, Kim, Carney & Large (under review). A repository for the tonality model from Large, Kim, Flaig, Bharucha & Krumhansl (2016) will be added soon. For the mathematical foundation and analysis of GrFNNs, see Large, Almonte & Velasco (2010) and Kim & Large (2015).

Version 1.2.1 is the current release.

A Python version is also available, developed by Jorge Herrera at CCRMA, Stanford University.

Jorge’s GrFNN is now available.

Karl Lerud’s Auditory Stimulus Design website provides detailed instructions and examples for creating auditory stimuli with the toolbox.

References:

Large, E. W., Kim, J. C., Flaig, N., Bharucha, J., & Krumhansl, C. L. (2016). A neurodynamic account of musical tonality. Music Perception, 33(3), 319-331. doi: 10.1525/mp.2016.33.3.319

Kim, J. C., & Large, E. W. (2015). Signal processing in forced gradient frequency neural oscillator networks. Frontiers in Computational Neuroscience, 9, 152. doi: 10.3389/fncom.2015.00152

Large, E. W., Herrera, J. A., & Velasco, M. J. (2015). Neural networks for beat perception in musical rhythm. Frontiers in Systems Neuroscience, 9, 159. doi: 10.3389/fnsys.2015.00159

Lerud, K. L., Almonte, F. V., Kim, J. C., & Large, E. W. (2014). Mode-locked neural oscillation predicts human auditory brainstem responses to musical intervals. Hearing Research, 308, 41-49. doi: 10.1016/j.heares.2013.09.010

Large, E. W., Almonte, F., & Velasco, M. (2010). A canonical model for gradient frequency neural networks. Physica D, 239, 905-911.